(**) Translated with www.DeepL.com/Translator

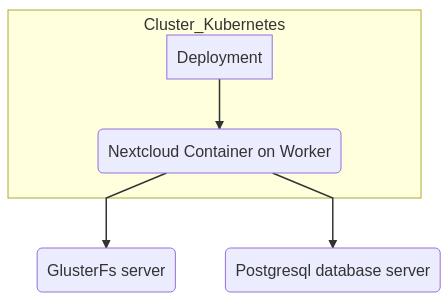

This part covers the migration of my existing Nextcloud server to the Kubernetes (k8s) cluster.

The first thing to check: is there an official Nextcloud container image for the “Arm64” platform?

Such an image does exist: it is the official image, maintained by the Nextcloud community, and it is available for Arm64 on the Docker registry.

Context and Architecture

Architecture

- Kubernetes cluster (ARM64)

- GlusterFS server (ARM64)

- Postgres Database Server (ARM64)

Context

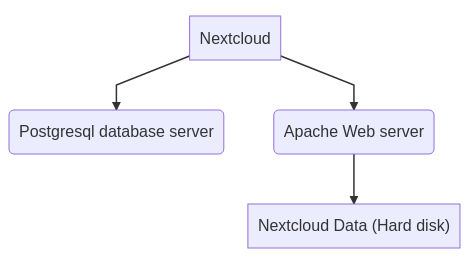

I already have a Nextcloud installation in my datacenter, split between a Postgres server, an Apache/PHP web server, and the Nextcloud data on an SSD disk.

The Nextcloud data will be migrated to the GlusterFS server (see the GlusterFS server installation procedure). The Postgres data remains on the same server.

It is important to make a backup of the entire Nextcloud installation before migrating to Kubernetes.
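As a rough sketch of such a backup, the two helpers below archive a directory and dump the database. The function names, the /backup target and the db.server host name are assumptions made for illustration; only the /var/www/nextcloud path and the "nextcloud" database name come from this article.

```shell
#!/bin/sh
# Sketch only: function names, /backup and db.server are assumptions.

# Archive one directory into a gzip-compressed tarball.
# usage: backup_dir <source directory> <archive file>
backup_dir() {
    tar -czf "$2" -C "$(dirname "$1")" "$(basename "$1")"
}

# Dump one database in PostgreSQL custom format.
# usage: backup_db <db host> <db user> <db name> <dump file>
backup_db() {
    pg_dump -h "$1" -U "$2" -d "$3" -Fc -f "$4"
}

# Example, to be run while the Nextcloud service is stopped:
#   backup_dir /var/www/nextcloud /backup/nextcloud-files.tar.gz
#   backup_db  db.server nextcloud nextcloud /backup/nextcloud-db.dump
```

Archiving the whole web root keeps config, data and apps together, which makes a rollback to the pre-migration state simpler.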

Preparing for deployment

We will prepare the deployment of Nextcloud in Kubernetes according to the specifications given in the documentation of the “Nextcloud-Docker” container. Reminder: I am starting from an existing production configuration.

At the time of writing, the “volumes” documented for Nextcloud are:

- /var/www/html Main folder, needed for updating

- /var/www/html/custom_apps installed / modified apps

- /var/www/html/config local configuration

- /var/www/html/data the actual data of your Nextcloud

- /var/www/html/themes/<YOUR_CUSTOM_THEME> theming/branding

My installation uses config, custom_apps and data; I don’t use custom themes.

Preparation of volumes

GlusterFS server

So I’m going to create three GlusterFS volumes:

- nextcloud-config

- nextcloud-data

- nextcloud-apps

On the GlusterFS server, my volumes will be stored under “/Disk1/gfs-nextcloud”. I create and start the necessary Gluster volumes:

BASEGFS="/Disk1/gfs-nextcloud"

mkdir -p "$BASEGFS"

VOLUMES="config data apps"

for VOLUME in $VOLUMES

do

# Create dir

mkdir -p "$BASEGFS/$VOLUME"

# Create volume (BASEGFS already starts with "/", so no extra slash after the colon)

gluster volume create "nextcloud-$VOLUME" "$(hostname):$BASEGFS/$VOLUME"

# Start volume

gluster volume start nextcloud-$VOLUME

done

Gluster server firewall: adding a volume to the Gluster domain also means updating the firewall rules of the Gluster server (1 exported volume = 1 new port); see the GlusterFS server installation procedure.

Nextcloud server (the one with the data to migrate)

I mount the Gluster volumes on this server, which also verifies that Gluster works properly:

mount -t glusterfs [gluster host]:/[volume name] [mount point], for example:

# Mount the nextcloud-config volume on /mnt of the Nextcloud server, you may need to install the gluster client beforehand : `apt -y install glusterfs-client`

mount -t glusterfs gluster.mydomaine:/nextcloud-config /mnt

# To unmount

umount /mnt

Once a volume is mounted, copy the relevant content into it, and repeat the operation for each volume:

- the “config” content of the existing Nextcloud installation goes into the Gluster volume nextcloud-config

- the “data” content of the existing Nextcloud installation goes into the Gluster volume nextcloud-data

- the “apps” content of the existing Nextcloud installation, without the apps shipped by default, goes into the Gluster volume nextcloud-apps

ATTENTION for the apps volume: here is the list of Nextcloud (core) apps present by default:

LIST="accessibility activity admin_audit cloud_federation_api comments contactsinteraction dashboard dav encryption federatedfilesharing federation files files_external files_pdfviewer files_rightclick files_sharing files_trashbin files_versions files_videoplayer firstrunwizard logreader lookup_server_connector nextcloud_announcements notifications oauth2 password_policy photos privacy provisioning_api recommendations serverinfo settings sharebymail support survey_client systemtags text theming twofactor_backupcodes updatenotification user_ldap user_status viewer weather_status workflowengine"

WARNING: This list can change at any time depending on the version you are migrating to. The simplest way is to copy the whole apps content into the “nextcloud-apps” volume and then delete the directories listed above.

LIST="[value listed above]"

cd [your Gluster volume mount point]

for rep in $LIST

do

rm -rf "$rep"

done
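Before deleting anything, it can help to list which directories would survive the loop above. The helper below is a minimal sketch (the function name list_custom_apps is hypothetical): it prints every sub-directory of the apps directory whose name is not in the core list.

```shell
#!/bin/sh
# Sketch only: list_custom_apps is a hypothetical helper name.
# usage: list_custom_apps <apps directory> "<space-separated core app names>"
list_custom_apps() {
    for path in "$1"/*/; do
        [ -d "$path" ] || continue
        app="$(basename "$path")"
        case " $2 " in
            *" $app "*) ;;        # core app: the loop above would delete it
            *) echo "$app" ;;     # custom app: it would be kept
        esac
    done
}

# Example, with the volume mounted on /mnt and LIST defined as above:
#   list_custom_apps /mnt "$LIST"
```

If the output contains an app you did not install yourself, the core list is probably out of date for your Nextcloud version.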

WARNING: If you copy data directly into the directory on the Gluster server, without going through a Gluster client, you will face a synchronization problem between what is stored on the Gluster server and what the clients “see”. See the solution at the end of this procedure.

Automation

Assumptions: your active Nextcloud service is installed in “/var/www/nextcloud”, the Gluster volume names match those above, and rsync and the GlusterFS client are installed.

On the active Nextcloud server, we transfer the live data to the GlusterFS server:

# Stop the nextcloud service

systemctl stop apache2

NEXTCLOUDPLACE="/var/www/nextcloud"

HOSTNAMEGLUSTER="gluster.server" # or gluster server IP address

VOLUMES="config data apps"

# To be sure

umount /mnt

for V in $VOLUMES

do

mount -t glusterfs "$HOSTNAMEGLUSTER:/nextcloud-$V" /mnt

rsync -ravH "$NEXTCLOUDPLACE/$V/" /mnt/

## Remove apps directory "non custom"

if [ "$V" == "apps" ];then

LIST="accessibility activity admin_audit cloud_federation_api comments contactsinteraction dashboard dav encryption federatedfilesharing federation files files_external files_pdfviewer files_rightclick files_sharing files_trashbin files_versions files_videoplayer firstrunwizard logreader lookup_server_connector nextcloud_announcements notifications oauth2 password_policy photos privacy provisioning_api recommendations serverinfo settings sharebymail support survey_client systemtags text theming twofactor_backupcodes updatenotification user_ldap user_status viewer weather_status workflowengine"

for rep in $LIST

do

rm -rf "/mnt/$rep"

done

fi

umount /mnt

done

The duration of the operation depends on the volume to be transferred.
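To check that a copy is complete, the source directory can be compared with the mounted volume. This is a minimal sketch using diff (the function name verify_copy is hypothetical). It only makes sense for config and data, since core apps are deliberately removed from the apps volume, and it reads every file, so it can take a long time on a large data directory.

```shell
#!/bin/sh
# Sketch only: verify_copy is a hypothetical helper name.
# usage: verify_copy <source directory> <mounted gluster volume>
verify_copy() {
    if diff -rq "$1" "$2" >/dev/null; then
        echo "identical"
    else
        echo "differs"
        return 1
    fi
}

# Example, with nextcloud-config mounted on /mnt:
#   verify_copy /var/www/nextcloud/config /mnt
```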

Adjusting the Nextcloud configuration

The Nextcloud configuration file “config.php” from the existing installation is now copied to the GlusterFS “nextcloud-config” volume. Edit this file and set the ‘datadirectory’ property to “/var/www/html/data”, which is the standard location in the Docker image built by Nextcloud:

<?php

$CONFIG = array (

[...]

'datadirectory' => "/var/www/html/data",

[...]
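The same edit can be scripted. The sketch below assumes the 'datadirectory' entry sits on a single line, as in a standard config.php; the function name set_datadirectory is hypothetical. It rewrites the file in place through a temporary copy so that the ownership and permissions of config.php are preserved.

```shell
#!/bin/sh
# Sketch only: set_datadirectory is a hypothetical helper name.
# usage: set_datadirectory <path to config.php> <new data directory>
set_datadirectory() {
    tmp="$(mktemp)" || return 1
    # Keep the indentation and the key, replace only the value.
    sed "s|^\([[:space:]]*'datadirectory'[[:space:]]*=>[[:space:]]*\).*|\1'$2',|" "$1" > "$tmp" \
        && cat "$tmp" > "$1"
    rm -f "$tmp"
}

# Example, with nextcloud-config mounted on /mnt:
#   set_datadirectory /mnt/config.php /var/www/html/data
```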

Database

Nothing to do in my case: the database stays on the current database server. As a reminder, my Nextcloud server does not host the “postgres” database; it already runs on another server.

Preparation of the Postgres database server

The server firewall and the pg_hba.conf file must allow access from all the “workers” of the Kubernetes cluster. For Postgres, this is generally port 5432, which needs a rule such as: INPUT allow 5432/TCP (from all worker IP addresses). In pg_hba.conf, you have probably limited access to the Nextcloud database to a single machine. To allow several machines, either widen the subnet mask (subnet.ninja-subnet-cheat-sheet) or add as many lines as necessary:

- a complete network (warning: this enlarges the attack surface):

host nextcloud nextcloud 172.28.4.0/24 md5

- or one line per host allowed to reach the database (best choice), shown here with 3 workers:

[...]

host nextcloud nextcloud 172.28.4.72/32 md5

host nextcloud nextcloud 172.28.4.73/32 md5

host nextcloud nextcloud 172.28.4.74/32 md5

[...]
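With more than a few workers, these lines can be generated rather than typed. A minimal sketch, where the function name pg_hba_lines is hypothetical and the md5 method simply mirrors the lines above:

```shell
#!/bin/sh
# Sketch only: pg_hba_lines is a hypothetical helper name.
# usage: pg_hba_lines <database> <user> <worker ip> [<worker ip> ...]
pg_hba_lines() {
    db="$1"; user="$2"; shift 2
    for ip in "$@"; do
        # One /32 entry per worker keeps the attack surface minimal.
        printf 'host %s %s %s/32 md5\n' "$db" "$user" "$ip"
    done
}

# Example:
#   pg_hba_lines nextcloud nextcloud 172.28.4.72 172.28.4.73 172.28.4.74
```

The output is meant to be reviewed and pasted into pg_hba.conf by hand, not appended blindly.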

To apply the changes, do not restart the Postgres service, so as not to “stop production”; use the “reload” action instead: systemctl reload postgresql

Creation of the manifest

The manifest will be composed of:

- 1 namespace : nextcloud

- 1 gluster service

- 1 gluster endpoint

- 3 Persistent Volumes and 3 Persistent Volume Claims: these give access to our 3 GlusterFS volumes

- 1 deployment

- 1 network service of type NodePort, giving access to the Nextcloud application

File to create on the master node (where the kubectl command is available): “nextcloud-deployment.yaml”.

Adjust values :

- [glusterfs server IP address]

- [Name of the volume gluster fs for nextcloud data]

- [Name of the volume gluster fs for nextcloud apps]

- [Name of the volume gluster fs for nextcloud config]

apiVersion: "v1"
kind: "Namespace"
metadata:
  name: "nextcloud"
  labels:
    name: "nextcloud"
---
apiVersion: "v1"
kind: "Service"
metadata:
  name: "glusterfs-cluster"
  namespace: "nextcloud"
spec:
  ports:
    - port: 1
---
apiVersion: v1
kind: "Endpoints"
metadata:
  name: "glusterfs-cluster"
  namespace: "nextcloud"
subsets:
  - addresses:
      - ip: [glusterfs server IP address]
    ports:
      - port: 1

---

apiVersion: "v1"
kind: "PersistentVolume"
metadata:
  name: "gluster-pv-[Name of the volume gluster fs for nextcloud data]"
  namespace: "nextcloud"
spec:
  capacity:
    storage: "400Gi"
  accessModes:
    - "ReadWriteMany"
  storageClassName: "[Name of the volume gluster fs for nextcloud data]"
  persistentVolumeReclaimPolicy: "Recycle"
  glusterfs:
    endpoints: "glusterfs-cluster"
    path: "[Name of the volume gluster fs for nextcloud data]"
    readOnly: false
---
apiVersion: "v1"
kind: "PersistentVolume"
metadata:
  name: "gluster-pv-[Name of the volume gluster fs for nextcloud apps]"
  namespace: "nextcloud"
spec:
  capacity:
    storage: "1Gi"
  accessModes:
    - "ReadWriteMany"
  storageClassName: "[Name of the volume gluster fs for nextcloud apps]"
  persistentVolumeReclaimPolicy: "Recycle"
  glusterfs:
    endpoints: "glusterfs-cluster"
    path: "[Name of the volume gluster fs for nextcloud apps]"
    readOnly: false
---
apiVersion: "v1"
kind: "PersistentVolume"
metadata:
  name: "gluster-pv-[Name of the volume gluster fs for nextcloud config]"
  namespace: "nextcloud"
spec:
  capacity:
    storage: "4k"
  accessModes:
    - "ReadWriteMany"
  storageClassName: "[Name of the volume gluster fs for nextcloud config]"
  persistentVolumeReclaimPolicy: "Recycle"
  glusterfs:
    endpoints: "glusterfs-cluster"
    path: "[Name of the volume gluster fs for nextcloud config]"
    readOnly: false

---

apiVersion: "v1"
kind: "PersistentVolumeClaim"
metadata:
  name: "glusterfs-claim-[Name of the volume gluster fs for nextcloud data]"
  namespace: "nextcloud"
spec:
  accessModes:
    - "ReadWriteMany"
  storageClassName: "[Name of the volume gluster fs for nextcloud data]"
  resources:
    requests:
      storage: "400Gi"
---
apiVersion: "v1"
kind: "PersistentVolumeClaim"
metadata:
  name: "glusterfs-claim-[Name of the volume gluster fs for nextcloud apps]"
  namespace: "nextcloud"
spec:
  accessModes:
    - "ReadWriteMany"
  storageClassName: "[Name of the volume gluster fs for nextcloud apps]"
  resources:
    requests:
      storage: "1Gi"
---
apiVersion: "v1"
kind: "PersistentVolumeClaim"
metadata:
  name: "glusterfs-claim-[Name of the volume gluster fs for nextcloud config]"
  namespace: "nextcloud"
spec:
  accessModes:
    - "ReadWriteMany"
  storageClassName: "[Name of the volume gluster fs for nextcloud config]"
  resources:
    requests:
      storage: "4k"

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: "nextcloud"
  namespace: "nextcloud"
spec:
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nextcloud
  replicas: 1
  template:
    metadata:
      labels:
        app: nextcloud
    spec:
      containers:
        - name: nextcloud
          image: nextcloud
          ports:
            - containerPort: 80
          volumeMounts:
            - mountPath: "/var/www/html/config"
              name: glusterfsvol-config
            - mountPath: "/var/www/html/data"
              name: glusterfsvol-data
            - mountPath: "/var/www/html/custom_apps"
              name: glusterfsvol-apps
      volumes:
        - name: glusterfsvol-config
          persistentVolumeClaim:
            claimName: glusterfs-claim-[Name of the volume gluster fs for nextcloud config]
        - name: glusterfsvol-data
          persistentVolumeClaim:
            claimName: glusterfs-claim-[Name of the volume gluster fs for nextcloud data]
        - name: glusterfsvol-apps
          persistentVolumeClaim:
            claimName: glusterfs-claim-[Name of the volume gluster fs for nextcloud apps]

---

apiVersion: v1
kind: Service
metadata:
  name: "nextcloud"
  namespace: "nextcloud"
spec:
  type: NodePort
  ports:
    - port: 80
      protocol: TCP
      targetPort: 80
  selector:
    app: "nextcloud"
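The bracketed placeholders in the manifest above can be filled in with sed instead of by hand. This is a minimal sketch: the function name fill_manifest and the idea of keeping the manifest as a template are assumptions, while the volume names are the ones created earlier on the GlusterFS server.

```shell
#!/bin/sh
# Sketch only: fill_manifest is a hypothetical helper name.
# Reads a manifest template on stdin and writes the filled manifest on stdout.
# usage: fill_manifest <glusterfs server IP address> < template > manifest
fill_manifest() {
    sed -e "s|\[glusterfs server IP address\]|$1|g" \
        -e 's|\[Name of the volume gluster fs for nextcloud data\]|nextcloud-data|g' \
        -e 's|\[Name of the volume gluster fs for nextcloud apps\]|nextcloud-apps|g' \
        -e 's|\[Name of the volume gluster fs for nextcloud config\]|nextcloud-config|g'
}

# Example (192.0.2.10 is a documentation address, replace it with your own):
#   fill_manifest 192.0.2.10 < template.yaml > manifest.yaml
```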

Apply this manifest:

kubectl apply -f nextcloud-deployment.yaml

# deployment.apps/nextcloud created

Check that the container starts (if you don’t have “kubectl” auto-completion, I advise you to reread the document “Install Kubernetes cluster”):

kubectl get pod -n nextcloud <Tab key>

# The end of the log indicates the status of the pod, wait a few minutes for a complete start...

# repeat the previous command, until complete startup : READY 1/1

NAME READY STATUS RESTARTS AGE

nextcloud-6759b5df47-blqbk 1/1 Running 0 5d23h
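Rather than re-running the command by eye, the READY column can be checked in a script. The helper below is a sketch (the name all_ready is hypothetical): it reads the table printed by kubectl get pod and succeeds only if at least one pod is listed and every pod shows n/n. Another option is kubectl rollout status deployment/nextcloud -n nextcloud, which blocks until the rollout is complete.

```shell
#!/bin/sh
# Sketch only: all_ready is a hypothetical helper name.
# Reads "kubectl get pod" output (with header) on stdin.
all_ready() {
    awk 'NR > 1 { n++; split($2, r, "/"); if (r[1] != r[2]) bad = 1 }
         END { exit (bad || n == 0) }'
}

# Example: poll every 10 seconds until the pod is ready.
#   until kubectl get pod -n nextcloud | all_ready; do sleep 10; done
```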

Exposure

Note that the manifest includes the “nextcloud” service, which exposes this application outside the cluster (NodePort type).

To discover this port :

kubectl get service -n nextcloud nextcloud

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nextcloud NodePort 10.107.203.125 <none> 80:31812/TCP 63d
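In the PORT(S) column, the value after the colon (31812 here) is the port opened on every node. To reuse it in a script, it can be extracted from the table; the helper name nodeport_from_table is hypothetical. kubectl can also return it directly with -o jsonpath='{.spec.ports[0].nodePort}'.

```shell
#!/bin/sh
# Sketch only: nodeport_from_table is a hypothetical helper name.
# Reads "kubectl get service" output (with header) on stdin and prints
# the node port of each listed service, e.g. 80:31812/TCP -> 31812.
nodeport_from_table() {
    awk 'NR > 1 { split($5, p, "[:/]"); print p[2] }'
}

# Example:
#   kubectl get service -n nextcloud nextcloud | nodeport_from_table
```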

To reach this new service, see the procedure “Expose services with a HAProxy server”.

Scaling NextCloud (Horizontal scaling)

For scaling (here horizontal scaling, i.e. two web servers sharing the same resources), a Redis service is needed and the “K8s deployment” configuration has to be modified. Nextcloud stores the sessions in Redis, which is faster than storing them in the Postgres database.

Installation of a Redis server

I chose to install it on the database server, which is lightly loaded; see the documentation:

Setting up two replicas on two different workers

Here I introduce replicas, i.e. the number of pods that must run for the application. In my case, I will spread them over two workers, which also brings in the notion of pod anti-affinity.

To apply these modifications :

- Either start from the manifest created previously, edit it, and then run the command:

kubectl apply -f nextcloud-deployment.yaml

- Or change the “deployment” directly with the kubectl “edit” command:

kubectl edit deployments.apps -n nextcloud nextcloud

[ESC:wq]

# Kube applies the changes immediately or returns an error

Notion of replicas

Manifest file: in the /spec node (root), set “replicas: [number of replicas]”

[...]

spec:
  replicas: 2

[...]

Notion of affinity (podAntiAffinity)

In the spec/template/spec node, I added an anti-affinity rule that prevents a pod from starting on a node that already runs a pod of this application:

spec:
  affinity:
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        - labelSelector:
            matchExpressions:
              - key: app
                operator: In
                values:
                  - nextcloud
          topologyKey: kubernetes.io/hostname

[...]
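To confirm that the anti-affinity rule took effect, the node of each pod can be listed and checked for duplicates. A minimal sketch, where the helper name on_distinct_nodes is hypothetical and the app=nextcloud label is the one used in the manifest:

```shell
#!/bin/sh
# Sketch only: on_distinct_nodes is a hypothetical helper name.
# Reads "<pod name> <node name>" lines on stdin and fails if two pods
# share the same node.
on_distinct_nodes() {
    awk '{ seen[$2]++ } END { for (n in seen) if (seen[n] > 1) exit 1 }'
}

# Example:
#   kubectl get pod -n nextcloud -l app=nextcloud --no-headers \
#     -o custom-columns=NAME:.metadata.name,NODE:.spec.nodeName | on_distinct_nodes
```

Because the rule is of the "required" kind, a replica that cannot be placed on a free worker stays Pending instead of sharing a node.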

Nextcloud part

This information comes from the Nextcloud documentation. By default, Nextcloud stores sessions as files, locally (i.e. inside each container). When you scale, Nextcloud uses Redis as the single storage point for sessions, so you must provide, through environment variables, the information needed to connect to the Redis service. This lets you stay authenticated when the load balancer directs you to a server other than the one that issued your authentication session.

Manifest file : node spec/template/spec/containers

[...]

containers:
  - env:
      - name: REDIS_HOST
        value: [IP address or FQDN of the Redis server]
      - name: REDIS_HOST_PORT
        value: "6379"
      - name: REDIS_HOST_PASSWORD
        value: [Access password to the Redis service]

[...]

Warning: I kept it simple here. Good practice is not to store passwords in clear text in configurations; to make the configuration more secure, see the notion of “Kubernetes secrets”.

After applying the modifications, the two pods start on two different workers. The HAProxy server already knows the two nodes, so it only remains to check, on the HAProxy server, that traffic is spread over both servers (my HAProxy config specifies “roundrobin” balancing).
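As a sketch of the secrets approach, the password can be stored once in a Kubernetes secret and referenced from the deployment instead of being written in the manifest. The secret name nextcloud-redis, the key password and the helper name redis_password_env are all assumptions; secretKeyRef is the standard Kubernetes field for this.

```shell
#!/bin/sh
# Sketch only: secret name, key name and helper name are assumptions.
# Create the secret once:
#   kubectl create secret generic nextcloud-redis -n nextcloud \
#     --from-literal=password='[Access password to the Redis service]'

# Print the env entry that replaces the clear-text REDIS_HOST_PASSWORD value.
# usage: redis_password_env <secret name> <key inside the secret>
redis_password_env() {
    cat <<EOF
- name: REDIS_HOST_PASSWORD
  valueFrom:
    secretKeyRef:
      name: $1
      key: $2
EOF
}

# Example:
#   redis_password_env nextcloud-redis password
```

The printed fragment goes in the env list of the container, at the same indentation as the other entries.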

Nextcloud Docker image version

If you scale this application horizontally, change the name of the Docker image to include a specific version number, for example: nextcloud:20.0.7. The configuration I gave above (image: nextcloud, without a version) fetches the most recent version available in the Docker Hub repository each time a pod starts. This is convenient when you have only one pod: when Nextcloud announces a new version, just stop the pod and K8s will automatically restart it with the new version of Nextcloud.

When one of the Nextcloud containers restarts, the worker node fetches the latest version from the Docker Hub repository; if you have replicas, the other containers keep running the old version.

You will very quickly face a version conflict between the running containers, with an “upgrade” request on one side and then a “downgrade” on the other, just after the new container has performed the “upgrade”!
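A small guard can catch an unpinned image before it is applied to a scaled deployment. This is a sketch; the helper name is_pinned is hypothetical, and it treats a missing tag or the latest tag as unpinned.

```shell
#!/bin/sh
# Sketch only: is_pinned is a hypothetical helper name.
# usage: is_pinned <image reference>   (exit status 0 if a fixed tag is set)
is_pinned() {
    name="${1##*/}"             # drop any registry/host:port prefix
    case "$name" in
        *:latest) return 1 ;;   # explicit "latest" floats like no tag
        *:*) return 0 ;;        # explicit tag or digest
        *) return 1 ;;          # no tag means "latest"
    esac
}

# Example: pin the running deployment to a fixed version.
#   is_pinned nextcloud:20.0.7 && \
#     kubectl set image deployment/nextcloud nextcloud=nextcloud:20.0.7 -n nextcloud
```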

Problems encountered

Glusterfs

- The Gluster mount point shows only part of the expected files: you did not copy the files through a GlusterFS client. The solution is here